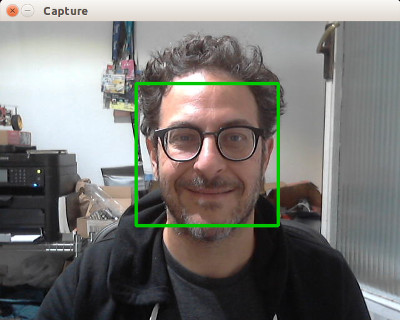

Display camera frame


28

vendor/gocv.io/x/gocv/.astylerc

generated

vendored

Normal file

@@ -0,0 +1,28 @@
--lineend=linux

--style=google

--indent=spaces=4
--indent-col1-comments
--convert-tabs

--attach-return-type
--attach-namespaces
--attach-classes
--attach-inlines

--add-brackets
--add-braces

--align-pointer=type
--align-reference=type

--max-code-length=100
--break-after-logical

--pad-comma
--pad-oper
--unpad-paren

--break-blocks
--pad-header

1

vendor/gocv.io/x/gocv/.dockerignore

generated

vendored

Normal file

@@ -0,0 +1 @@
**

11

vendor/gocv.io/x/gocv/.gitignore

generated

vendored

Normal file

@@ -0,0 +1,11 @@
profile.cov
count.out
*.swp
*.snap
/parts
/prime
/stage
.vscode/
/build
.idea/
contrib/data.yaml

60

vendor/gocv.io/x/gocv/.travis.yml

generated

vendored

Normal file

@@ -0,0 +1,60 @@
# Use new container infrastructure to enable caching
sudo: required
dist: trusty

# language is go
language: go
go:
  - "1.13"
go_import_path: gocv.io/x/gocv

addons:
  apt:
    packages:
      - libgmp-dev
      - build-essential
      - cmake
      - git
      - libgtk2.0-dev
      - pkg-config
      - libavcodec-dev
      - libavformat-dev
      - libswscale-dev
      - libtbb2
      - libtbb-dev
      - libjpeg-dev
      - libpng-dev
      - libtiff-dev
      - libjasper-dev
      - libdc1394-22-dev
      - xvfb

before_install:
  - ./travis_build_opencv.sh
  - export PKG_CONFIG_PATH=$(pkg-config --variable pc_path pkg-config):$HOME/usr/lib/pkgconfig
  - export INCLUDE_PATH=$HOME/usr/include:${INCLUDE_PATH}
  - export LD_LIBRARY_PATH=$HOME/usr/lib:${LD_LIBRARY_PATH}
  - sudo ln /dev/null /dev/raw1394
  - export DISPLAY=:99.0
  - sh -e /etc/init.d/xvfb start

before_cache:
  - rm -f $HOME/fresh-cache

script:
  - export GOCV_CAFFE_TEST_FILES="${HOME}/testdata"
  - export GOCV_TENSORFLOW_TEST_FILES="${HOME}/testdata"
  - export OPENCV_ENABLE_NONFREE=ON
  - echo "Ensuring code is well formatted"; ! gofmt -s -d . | read
  - go test -v -coverprofile=coverage.txt -covermode=atomic -tags matprofile .
  - go test -tags matprofile ./contrib -coverprofile=contrib.txt -covermode=atomic; cat contrib.txt >> coverage.txt; rm contrib.txt;

after_success:
  - bash <(curl -s https://codecov.io/bash)

# Caching so the next build will be fast as possible.
cache:
  timeout: 1000
  directories:
    - $HOME/usr
    - $HOME/testdata

716

vendor/gocv.io/x/gocv/CHANGELOG.md

generated

vendored

Normal file

@@ -0,0 +1,716 @@
0.22.0
---
* **bgsegm**
    * Add BackgroundSubtractorCNT
* **calib3d**
    * Added undistort function (#520)
* **core**
    * add functions (singular value decomposition, multiply between matrices, transpose matrix) (#559)
    * Add new funcs (#578)
    * add setIdentity() method to Mat
    * add String method (#552)
    * MatType: add missing constants
* **dnn**
    * Adding GetLayerNames()
    * respect the bit depth of the input image to set the expected output when converting an image to a blob
* **doc**
    * change opencv version 3.x to 4.x
* **docker**
    * use Go1.13.5 for image
* **imgcodecs**
    * Fix webp image decode error (#523)
    imgcodecs: optimize copy of data used for IMDecode method
* **imgproc**
    * Add GetRectSubPix
    * Added ClipLine
    * Added InvertAffineTransform
    * Added LinearPolar function (#524)
    * correct ksize param used for MedianBlur unit test
    * Feature/put text with line type (#527)
    * FitEllipse
    * In FillPoly and DrawContours functions, remove func() wrap to avoid memory freed before calling opencv functions. (#543)
* **objdetect**
    * Add support QR codes
* **opencv**
    * update to OpenCV 4.2.0 release
* **openvino**
    * Add openvino async
* **test**
    * Tolerate imprecise result in SolvePoly
    * Tolerate imprecision in TestHoughLines

0.21.0
---
* **build**
    * added go clean --cache to clean target, see issue 458
* **core**
    * Add KMeans function
    * added MeanWithMask function for Mats (#487)
    * Fix possible resource leak
* **cuda**
    * added cudaoptflow
    * added NewGpuMatFromMat which creates a GpuMat from a Mat
    * Support for CUDA Image Warping (#494)
* **dnn**
    * add BlobFromImages (#467)
    * add ImagesFromBlob (#468)
* **docs**
    * update ROADMAP with all recent contributions. Thank you!
* **examples**
    * face detection from image url by using IMDecode (#499)
    * better format
* **imgproc**
    * Add calcBackProject
    * Add CompareHist
    * Add DistanceTransform and Watershed
    * Add GrabCut
    * Add Integral
    * Add MorphologyExWithParams
* **opencv**
    * update to version 4.1.2
* **openvino**
    * updates needed for 2019 R3
* **videoio**
    * Added ToCodec to convert FOURCC string to numeric representation (#485)

0.20.0
---
* **build**
    * Use Go 1.12.x for build
    * Update to OpenCV 4.1.0
* **cuda**
    * Initial cuda implementation
* **docs**
    * Fix the command to install xquartz via brew/cask
* **features2d**
    * Add support for SimpleBlobDetectorParams (#434)
    * Added FastFeatureDetectorWithParams
* **imgproc**
    * Added function call to cv::morphologyDefaultBorderValue
* **test**
    * Increase test coverage for FP16BlobFromImage()
* **video**
    * Added calcOpticalFlowPyrLKWithParams
    * Addition of MOG2/KNN constructor with options

0.19.0
---
* **build**
    * Adds Dockerfile. Updates Makefile and README.
    * make maintainer tag same as dockerhub organization name
    * make sure to run tests for non-free contrib algorithms
    * update Appveyor build to use Go 1.12
* **calib3d**
    * add func InitUndistortRectifyMap (#405)
* **cmd**
    * correct formatting of code in example
* **core**
    * Added Bitwise Operations With Masks
    * update to OpenCV4.0.1
* **dnn**
    * add new backend and target types for NVIDIA and FPGA
    * Added blobFromImages in ROADMAP.md (#403)
    * Implement dnn methods for loading in-memory models.
* **docker**
    * update Dockerfile to use OpenCV 4.0.1
* **docs**
    * update ROADMAP from recent contributions
* **examples**
    * Fixing filename in caffe-classifier example
* **imgproc**
    * Add 'MinEnclosingCircle' function
    * added BoxPoints function and BorderIsolated const
    * Added Connected Components
    * Added the HoughLinesPointSet function.
    * Implement CLAHE to imgproc
* **openvino**
    * remove lib no longer included during non-FPGA installations
* **test**
    * Add len(kp) == 232 to TestMSER, seems this is necessary for MacOS for some reason.

0.18.0
---
* **build**
    * add OPENCV_GENERATE_PKGCONFIG flag to generate pkg-config file
    * Add required curl package to the RPM and DEBS
    * correct name for zip directory used for code download
    * Removing linking against face contrib module
    * update CI to use 4.0.0 release
    * update Makefile and Windows build command file to OpenCV 4.0.0
    * use opencv4 file for pkg-config
* **core**
    * add ScaleAdd() method to Mat
* **docs**
    * replace OpenCV 3.4.3 references with OpenCV 4
    * update macOS installation info to refer to new OpenCV 4.0 brew
    * Updated function documentation with information about errors.
* **examples**
    * Improve accuracy in hand gesture sample
* **features2d**
    * update drawKeypoints() to use new stricter enum
* **openvino**
    * changes to accommodate release 2018R4
* **profile**
    * add build tag matprofile to allow for conditional inclusion of custom profile
    * Add Mat profile wrapper in other areas of the library.
    * Add MatProfile.
    * Add MatProfileTest.
    * move MatProfile tests into separate test file so they only run when custom profiler active
* **test**
    * Close images in tests.
    * More Closes in tests.
    * test that we are using 4.0.x version now
* **videoio**
    * Return the right type and error when opening VideoCapture fails

0.17.0
---
* **build**
    * Update Makefile
    * update version of OpenCV used to 3.4.3
    * use link to OpenCV 3.4.3 for Windows builds
* **core**
    * add mulSpectrums wrapper
    * add PolarToCart() method to Mat
    * add Reduce() method to Mat
    * add Repeat() method to Mat
    * add Solve() method to Mat
    * add SolveCubic() method to Mat
    * add SolvePoly() method to Mat
    * add Sort() method to Mat
    * add SortIdx() method to Mat
    * add Trace() method to Mat
    * Added new MatType
    * Added Phase function
* **dnn**
    * update test to match OpenCV 3.4.3 behavior
* **docs**
    * Add example of how to run individual test
    * adding instructions for installing pkgconfig for macOS
    * fixed GOPATH bug.
    * update ROADMAP from recent contributions
* **examples**
    * add condition to handle no circle found in circle detection example
* **imgcodecs**
    * Added IMEncodeWithParams function
* **imgproc**
    * Added Filter2D function
    * Added fitLine function
    * Added logPolar function
    * Added Remap function
    * Added SepFilter2D function
    * Added Sobel function
    * Added SpatialGradient function
* **xfeatures2d**
    * do not run SIFT test unless OpenCV was built using OPENCV_ENABLE_NONFREE
    * do not run SURF test unless OpenCV was built using OPENCV_ENABLE_NONFREE

0.16.0
---
* **build**
    * add make task for Raspbian install with ARM hardware optimizations
    * use all available cores to compile OpenCV on Windows as discussed in issue #275
    * download performance improvements for OpenCV installs on Windows
    * correct various errors and issues with OpenCV installs on Fedora and CentOS
* **core**
    * correct spelling error in constant to fix issue #269
    * implemented & added test for Mat.SetTo
    * improve Multiply() GoDoc and test showing Scalar() multiplication
    * mutator functions for Mat add, subtract, multiply, and divide for uint8 and float32 values.
* **dnn**
    * add FP16BlobFromImage() function to convert an image Mat to a half-float aka FP16 slice of bytes
* **docs**
    * fix a varible error in example code in README

0.15.0
---
* **build**
    * add max to make -j
    * improve path for Windows to use currently configured GOPATH
* **core**
    * Add Mat.DataPtr methods for direct access to OpenCV data
    * Avoid extra copy in Mat.ToBytes + code review feedback
* **dnn**
    * add test coverage for ParseNetBackend and ParseNetTarget
    * complete test coverage
* **docs**
    * minor cleanup of language for install
    * use chdir instead of cd in Windows instructions
* **examples**
    * add 'hello, video' example to repo
    * add HoughLinesP example
    * correct message on device close to match actual event
    * small change in display message for when file is input source
    * use DrawContours in motion detect example
* **imgproc**
    * Add MinAreaRect() function
* **test**
    * filling test coverage gaps
* **videoio**
    * add test coverage for OpenVideoCapture

0.14.0
---
* **build**
    * Add -lopencv_calib3d341 to the linker
    * auto-confirm on package installs from make deps command
    * display PowerShell download status for OpenCV files
    * obtain caffe test config file from new location in Travis build
    * remove VS only dependencies from OpenCV build, copy caffe test config file from new location
    * return back to GoCV directory after OpenCV install
    * update for release of OpenCV v3.4.2
    * use PowerShell for scripted OpenCV install for Windows
    * win32 version number has not changed yet
* **calib3d**
    * Add Calibrate for Fisheye model(WIP)
* **core**
    * add GetTickCount function
    * add GetTickFrequency function
    * add Size() and FromPtr() methods to Mat
    * add Total method to Mat
    * Added RotateFlag type
    * correct CopyTo to use pointer to Mat as destination
    * functions converting Image to Mat
    * rename implementation to avoid conflicts with Windows
    * stricter use of reflect.SliceHeader
* **dnn**
    * add backend/device options to caffe and tensorflow DNN examples
    * add Close to Layer
    * add first version of dnn-pose-detection example
    * add further comments to object detection/tracking DNN example
    * add GetPerfProfile function to Net
    * add initial Layer implementation alongside enhancements to Net
    * add InputNameToIndex to Layer
    * add new functions allowing DNN backends such as OpenVINO
    * additional refactoring and comments in dnn-pose-detection example
    * cleanup DNN face detection example
    * correct const for device targets to be called Target
    * correct test that expected init slice with blank entries
    * do not init slice with blank entries, since added via append
    * further cleanup of DNN face detection example
    * make dnn-pose-detection example use Go channels for async operation
    * refactoring and additional comments for object detection/tracking DNN example
    * refine comment in header for style transfer example
    * working style transfer example
    * added ForwardLayers() to accomodate models with multiple output layers
* **docs**
    * add scripted Windows install info to README
    * Added a sample gocv workflow contributing guideline
    * mention docker image in README.
    * mention work in progress on Android
    * simplify and add missing step in Linux installation in README
    * update contributing instructions to match latest version
    * update ROADMAP from recent calib3d module contribution
    * update ROADMAP from recent imgproc histogram contribution
* **examples**
    * cleanup header for caffe dnn classifier
    * show how to use either Caffe or Tensorflow for DNN object detection
    * further improve dnn samples
    * rearrange and add comments to dnn style transfer example
    * remove old copy of pose detector
    * remove unused example
* **features2d**
    * free memory allocation bug for C.KeyPoints as pointed out by @tzununbekov
    * Adding opencv::drawKeypoints() support
* **imgproc**
    * add equalizeHist function
    * Added opencv::calcHist implementation
* **openvino**
    * add needed environment config to execute examples
    * further details in README explaining how to use
    * remove opencv contrib references as they are not included in OpenVINO
* **videoio**
    * Add OpenVideoCapture
    * Use gocv.VideoCaptureFile if string is specified for device.

0.13.0
---
* **build**
    * Add cgo directives to contrib
    * contrib subpackage also needs cpp 11 or greater for a warning free build on Linux
    * Deprecate env scripts and update README
    * Don't set --std=c++1z on non-macOS
    * Remove CGO vars from CI and correct Windows cgo directives
    * Support pkg-config via cgo directives
    * we actually do need cpp 11 or greater for a warning free build on Linux
* **docs**
    * add a Github issue template to project
    * provide specific examples of using custom environment
* **imgproc**
    * add HoughLinesPWithParams() function
* **openvino**
    * add build tag specific to openvino
    * add roadmap info
    * add smoke test for ie

0.12.0
---
* **build**
    * convert to CRLF
    * Enable verbosity for travisCI
    * Further improvements to Makefile
* **core**
    * Add Rotate, VConcat
    * Adding InScalarRange and NewMatFromScalarWithSize functions
    * Changed NewMatFromScalarWithSize to NewMatWithSizeFromScalar
    * implement CheckRange(), Determinant(), EigenNonSymmetric(), Min(), and MinMaxIdx() functions
    * implement PerspectiveTransform() and Sqrt() functions
    * implement Transform() and Transpose() functions
    * Make toByteArray safe for empty byte slices
    * Renamed InScalarRange to InRangeWithScalar
* **docs**
    * nicer error if we can't read haarcascade_frontalface_default
    * correct some ROADMAP links
    * Fix example command.
    * Fix executable name in help text.
    * update ROADMAP from recent contributions
* **imgproc**
    * add BoxFilter and SqBoxFilter functions
    * Fix the hack to convert C arrays to Go slices.
* **videoio**
    * Add isColor to VideoWriterFile
    * Check numerical parameters for gocv.VideoWriterFile
    * CodecString()
* **features2d**
    * add BFMatcher
* **img_hash**
    * Add contrib/img_hash module
    * add GoDocs for new img_hash module
    * Add img-similarity as an example for img_hash
* **openvino**
    * adds support for Intel OpenVINO toolkit PVL
    * starting experimental work on OpenVINO IE
    * update README files for Intel OpenVINO toolkit support
    * WIP on IE can load an IR network

0.11.0
---
* **build**
    * Add astyle config
    * Astyle cpp/h files
    * remove duplication in Makefile for astyle
* **core**
    * Add GetVecfAt() function to Mat
    * Add GetVeciAt() function to Mat
    * Add Mat.ToImage()
    * add MeanStdDev() method to Mat
    * add more functions
    * Compare Mat Type directly
    * further cleanup for GoDocs and enforce type for convariance operations
    * Make borderType in CopyMakeBorder be type BorderType
    * Mat Type() should return MatType
    * remove unused convenience functions
    * use Mat* to indicate when a Mat is mutable aka an output parameter
* **dnn**
    * add a ssd sample and a GetBlobChannel helper
    * added another helper func and a pose detection demo
* **docs**
    * add some additional detail about adding OpenCV functions to GoCV
    * updates to contribution guidelines
    * fill out complete list of needed imgproc functions for sections that have work started
    * indicate that missing imgproc functions need implementation
    * mention the WithParams patterns to be used for functions with default params
    * update README for the Mat* based API changes
    * update ROADMAP for recent changes especially awesome recent core contributions from @berak
* **examples**
    * Fix tf-classifier example
    * move new DNN advanced examples into separate folders
    * Update doc for the face contrib package
    * Update links in caffe-classifier demo
    * WIP on hand gestures tracking example
* **highgui**
    * fix constant in NewWindow
* **imgproc**
    * Add Ellipse() and FillPoly() functions
    * Add HoughCirclesWithParams() func
    * correct output Mat to for ConvexHull()
    * rename param being used for Mat image to be modified
* **tracking**
    * add support for TrackerMIL, TrackerBoosting, TrackerMedianFlow, TrackerTLD, TrackerKCF, TrackerMOSSE, TrackerCSRT trackers
    * removed mutitracker, added Csrt, rebased
    * update GoDocs and minor renaming based on gometalint output

0.10.0
---
* **build**
    * install unzip before build
    * overwrite when unzipping file to install Tensorflow test model
    * use -DCPU_DISPATCH= flag for build to avoid problem with disabled AVX on Windows
    * update unzipped file when installing Tensorflow test model
* **core**
    * add Compare() and CountNonZero() functions
    * add getter/setter using optional params for multi-dimensional Mat using row/col/channel
    * Add mat subtract function
    * add new toRectangle function to DRY up conversion from CRects to []image.Rectangle
    * add split subtract sum wrappers
    * Add toCPoints() helper function
    * Added Mat.CopyToWithMask() per #47
    * added Pow() method
    * BatchDistance BorderInterpolate CalcCovarMatrix CartToPolar
    * CompleteSymm ConvertScaleAbs CopyMakeBorder Dct
    * divide, multiply
    * Eigen Exp ExtractChannels
    * operations on a 3d Mat are not same as a 2d multichannel Mat
    * resolve merge conflict with duplicate Subtract() function
    * run gofmt on core tests
    * Updated type for Mat.GetUCharAt() and Mat.SetUCharAt() to reflect uint8 instead of int8
* **docs**
    * update ROADMAP of completed functions in core from recent contributions
* **env**
    * check loading resources
    * Add distribution detection to deps rule
    * Add needed environment variables for Linux
* **highgui**
    * add some missing test coverage on WaitKey()
* **imgproc**
    * Add adaptive threshold function
    * Add pyrDown and pyrUp functions
    * Expose DrawContours()
    * Expose WarpPerspective and GetPerspectiveTransform
    * implement ConvexHull() and ConvexityDefects() functions
* **opencv**
    * update to OpenCV version 3.4.1

0.9.0
---
* **bugfix**
    * correct several errors in size parameter ordering
* **build**
    * add missing opencv_face lib reference to env.sh
    * Support for non-brew installs of opencv on Darwin
* **core**
    * add Channels() method to Mat
    * add ConvertTo() and NewMatFromBytes() functions
    * add Type() method to Mat
    * implement ConvertFp16() function
* **dnn**
    * use correct size for blob used for Caffe/Tensorflow tests
* **docs**
    * Update copyright date and Apache 2.0 license to include full text
* **examples**
    * cleanup mjpeg streamer code
    * cleanup motion detector comments
    * correct use of defer in loop
    * use correct size for blob used for Caffe/Tensorflow examples
* **imgproc**
    * Add cv::approxPolyDP() bindings.
    * Add cv::arcLength() bindings.
    * Add cv::matchTemplate() bindings.
    * correct comment and link for Blur function
    * correct docs for BilateralFilter()

0.8.0
---
* **core**
    * add ColorMapFunctions and their test
    * add Mat ToBytes
    * add Reshape and MinMaxLoc functions
    * also delete points
    * fix mistake in the norm function by taking NormType instead of int as parameter
    * SetDoubleAt func and his test
    * SetFloatAt func and his test
    * SetIntAt func and his test
    * SetSCharAt func and his test
    * SetShortAt func and his test
    * SetUCharAt fun and his test
    * use correct delete operator for array of new, eliminates a bunch of memory leaks
* **dnn**
    * add support for loading Tensorflow models
    * adjust test for Caffe now that we are auto-cropping blob
    * first pass at adding Caffe support
    * go back to older function signature to avoid version conflicts with Intel CV SDK
    * properly close DNN Net class
    * use approx. value from test result to account forr windows precision differences
* **features2d**
    * implement GFTTDetector, KAZE, and MSER algorithms
    * modify MSER test for Windows results
* **highgui**
    * un-deprecate WaitKey function needed for CLI apps
* **imgcodec**
    * add fileExt type
* **imgproc**
    * add the norm wrapper and use it in test for WarpAffine and WarpAffineWithParams
    * GetRotationMatrix2D, WarpAffine and WarpAffineWithParams
    * use NormL2 in wrap affine
* **pvl**
    * add support for FaceRecognizer
    * complete wrappers for all missing FaceDetector functions
    * update instructions to match R3 of Intel CV SDK
* **docs**
    * add more detail about exactly which functions are not yet implememented in the modules that are marked as 'Work Started'
    * add refernece to Tensorflow example, and also suggest brew upgrade for MacOS
    * improve ROADMAP to help would-be contributors know where to get started
    * in the readme, explain compiling to a static library
    * remove many godoc warnings by improving function descriptions
    * update all OpenCV 3.3.1 references to v3.4.0
    * update CGO_LDFLAGS references to match latest requirements
    * update contribution guidelines to try to make it more inviting
* **examples**
    * add Caffe classifier example
    * add Tensorflow classifier example
    * fixed closing window in examples in infinite loop
    * fixed format of the examples with gofmt
* **test**
    * add helper function for test : floatEquals
    * add some attiribution from test function
    * display OpenCV version in case that test fails
    * add round function to allow for floating point accuracy differences due to GPU usage.
* **build**
    * improve search for already installed OpenCV on MacOS
    * update Appveyor build to Opencv 3.4.0
    * update to Opencv 3.4.0

0.7.0
---
* **core**
    * correct Merge implementation
* **docs**
    * change wording and formatting for roadmap
    * update roadmap for a more complete list of OpenCV functionality
    * sequence docs in README in same way as the web site, aka by OS
    * show in README that some work was done on contrib face module
* **face**
    * LBPH facerecognizer bindings
* **highgui**
    * complete implementation for remaining API functions
* **imgcodecs**
    * add IMDecode function
* **imgproc**
    * elaborate on HoughLines & HoughLinesP tests to fetch a few individual results
* **objdetect**
    * add GroupRectangles function
* **xfeatures2d**
    * add SIFT and SURF algorithms from OpenCV contrib
    * improve description for OpenCV contrib
    * run tests from OpenCV contrib

0.6.0
---
* **core**
    * Add cv::LUT binding
* **examples**
    * do not try to go fullscreen, since does not work on OSX
* **features2d**
    * add AKAZE algorithm
    * add BRISK algorithm
    * add FastFeatureDetector algorithm
    * implement AgastFeatureDetector algorithm
    * implement ORB algorithm
    * implement SimpleBlobDetector algorithm
* **osx**
    * Fix to get the OpenCV path with "brew info".
* **highgui**
    * use new Window with thread lock, and deprecate WaitKey() in favor of Window.WaitKey()
    * use Window.WaitKey() in tests
* **imgproc**
    * add tests for HoughCircles
* **pvl**
    * use correct Ptr referencing
* **video**
    * use smart Ptr for Algorithms thanks to @alalek
    * use unsafe.Pointer for Algorithm
    * move tests to single file now that they all pass

0.5.0
---
* **core**
    * add TermCriteria for iterative algorithms
* **imgproc**
    * add CornerSubPix() and GoodFeaturesToTrack() for corner detection
* **objdetect**
    * add DetectMultiScaleWithParams() for HOGDescriptor
    * add DetectMultiScaleWithParams() to allow override of defaults for CascadeClassifier
* **video**
    * add CalcOpticalFlowFarneback() for Farneback optical flow calculations
    * add CalcOpticalFlowPyrLK() for Lucas-Kanade optical flow calculations
* **videoio**
    * use temp directory for Windows test compat.
* **build**
    * enable Appveyor build w/cache
* **osx**
    * update env path to always match installed OpenCV from Homebrew

0.4.0

|

||||

---

|

||||

* **core**

|

||||

* Added cv::mean binding with single argument

|

||||

* fix the write-strings warning

|

||||

* return temp pointer fix

|

||||

* **examples**

|

||||

* add counter example

|

||||

* add motion-detect command

|

||||

* correct counter

|

||||

* remove redundant cast and other small cleanup

|

||||

* set motion detect example to fullscreen

|

||||

* use MOG2 for continuous motion detection, instead of simplistic first frame only

|

||||

* **highgui**

|

||||

* ability to better control the fullscreen window

|

||||

* **imgproc**

|

||||

* add BorderType param type for GaussianBlur

|

||||

* add BoundingRect() function

|

||||

* add ContourArea() function

|

||||

* add FindContours() function along with associated data types

|

||||

* add Laplacian and Scharr functions

|

||||

* add Moments() function

|

||||

* add Threshold function

|

||||

* **pvl**

|

||||

* add needed lib for linker missing in README

|

||||

* **test**

|

||||

* slightly more permissive version test

|

||||

* **videoio**

|

||||

* Add image compression flags for gocv.IMWrite

|

||||

* Fixed possible looping out of compression parameters length

|

||||

* Make dedicated function to run cv::imwrite with compression parameters

|

||||

|

||||

0.3.1

|

||||

---

|

||||

* **overall**

|

||||

* Update to use OpenCV 3.3.1

|

||||

|

||||

0.3.0

|

||||

---

|

||||

* **docs**

|

||||

* Correct Windows build location from same @jpfarias fix to gocv-site

|

||||

* **core**

|

||||

* Add Resize

|

||||

* Add Mat merge and Discrete Fourier Transform

|

||||

* Add CopyTo() and Normalize()

|

||||

* Implement various important Mat logical operations

|

||||

* **video**

|

||||

* BackgroundSubtractorMOG2 algorithm now working

|

||||

* Add BackgroundSubtractorKNN algorithm from video module

|

||||

* **videoio**

|

||||

* Add VideoCapture::get

|

||||

* **imgproc**

|

||||

* Add BilateralFilter and MedianBlur

|

||||

* Additional drawing functions implemented

|

||||

* Add HoughCircles filter

|

||||

* Implement various morphological operations

|

||||

* **highgui**

|

||||

* Add Trackbar support

|

||||

* **objdetect**

|

||||

* Add HOGDescriptor

|

||||

* **build**

|

||||

* Remove race from test on Travis, since it causes CGo segfault in MOG2

|

||||

|

||||

0.2.0

|

||||

---

|

||||

* Switchover to custom domain for package import

|

||||

* Yes, we have Windows

|

||||

|

||||

0.1.0

|

||||

---

|

||||

Initial release!

|

||||

|

||||

- [X] Video capture

|

||||

- [X] GUI Window to display video

|

||||

- [X] Image load/save

|

||||

- [X] CascadeClassifier for object detection/face tracking/etc.

|

||||

- [X] Installation instructions for Ubuntu

|

||||

- [X] Installation instructions for OS X

|

||||

- [X] Code example to use VideoWriter

|

||||

- [X] Intel CV SDK PVL FaceTracker support

|

||||

- [X] imgproc Image processing

|

||||

- [X] Travis CI build

|

||||

- [X] At least minimal test coverage for each OpenCV class

|

||||

- [X] Implement more of imgproc Image processing

|

||||

136

vendor/gocv.io/x/gocv/CONTRIBUTING.md

generated

vendored

Normal file

@ -0,0 +1,136 @@

|

||||

# How to contribute

|

||||

|

||||

Thank you for your interest in improving GoCV.

|

||||

|

||||

We would like your help to make this project better, so we appreciate any contributions. See if one of the following descriptions matches your situation:

|

||||

|

||||

### Newcomer to GoCV, to OpenCV, or to computer vision in general

|

||||

|

||||

We'd love to get your feedback on getting started with GoCV. Did you run into any difficulty, confusion, or anything else? You are not alone. We want to know about your experience, so we can help the next people. Please open a GitHub issue with your questions, or get in touch with us directly.

|

||||

|

||||

### Something in GoCV is not working as you expect

|

||||

|

||||

Please open a GitHub issue with your problem, and we will be happy to assist.

|

||||

|

||||

### Something you want/need from OpenCV does not appear to be in GoCV

|

||||

|

||||

We probably have not implemented it yet. Please take a look at our [ROADMAP.md](ROADMAP.md). Your pull request adding the functionality to GoCV would be greatly appreciated.

|

||||

|

||||

### You found some Python code on the Internet that performs some computer vision task, and you want to do it using GoCV

|

||||

|

||||

Please open a GitHub issue with your needs, and we can see what we can do.

|

||||

|

||||

## How to use our GitHub repository

|

||||

|

||||

The `master` branch of this repo will always have the latest released version of GoCV. All of the active development work for the next release will take place in the `dev` branch. GoCV will use semantic versioning and will create a tag/release for each release.

|

||||

|

||||

Here is how to contribute back some code or documentation:

|

||||

|

||||

- Fork repo

|

||||

- Create a feature branch off of the `dev` branch

|

||||

- Make some useful change

|

||||

- Submit a pull request against the `dev` branch.

|

||||

- Be kind

|

||||

|

||||

## How to add a function from OpenCV to GoCV

|

||||

|

||||

Here are a few basic guidelines on how to add a function from OpenCV to GoCV:

|

||||

|

||||

- Please open a GitHub issue. We want to help, and also make sure that there is no duplication of effort. Sometimes what you need is already being worked on by someone else.

|

||||

- Use the proper Go style naming `MissingFunction()` for the Go wrapper.

|

||||

- Make any output parameters `Mat*` to indicate to developers that the underlying OpenCV data will be changed by the function.

|

||||

- Use Go types when possible as parameters for example `image.Point` and then convert to the appropriate OpenCV struct. Also define a new type based on `int` and `const` values instead of just passing "magic numbers" as params. For example, the `VideoCaptureProperties` type used in `videoio.go`.

|

||||

- Always add the function to the GoCV file named the same as the OpenCV module to which the function belongs.

|

||||

- If the new function is in a module that is not yet implemented by GoCV, a new set of files for that module will need to be added.

|

||||

- Always add a "smoke" test for the new function being added. We are not testing OpenCV itself, but just the GoCV wrapper, so all that is needed generally is just exercising the new function.

|

||||

- If OpenCV has any default params for a function, we have been implementing 2 versions of the function since Go does not support overloading. For example, with an OpenCV function:

|

||||

|

||||

```c
opencv::xYZ(int p1, int p2, int p3=2, int p4=3);
```

|

||||

|

||||

We would define 2 functions in GoCV:

|

||||

|

||||

```go
// uses default param values
XYZ(p1, p2)

// sets each param
XYZWithParams(p1, p2, p3, p4)
```
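
As a rough sketch of the two-function pattern described above (not GoCV's actual implementation): `XYZ` and `XYZWithParams` are the placeholder names from the example, and the function bodies here are stand-ins, since a real wrapper would call into OpenCV through cgo rather than add integers.

```go
package main

import "fmt"

// XYZWithParams sets every parameter explicitly.
// The body is a placeholder; a real wrapper would call the C binding.
func XYZWithParams(p1, p2, p3, p4 int) int {
	return p1 + p2 + p3 + p4
}

// XYZ covers the common case by filling in the OpenCV defaults
// from the example signature above (p3=2, p4=3).
func XYZ(p1, p2 int) int {
	return XYZWithParams(p1, p2, 2, 3)
}

func main() {
	// Both calls should agree when the explicit values equal the defaults.
	fmt.Println(XYZ(10, 20) == XYZWithParams(10, 20, 2, 3))
}
```

Having the short form delegate to the `WithParams` form is one possible design choice; it keeps the default values in a single place.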

|

||||

|

||||

## How to run tests

|

||||

|

||||

To run the tests:

|

||||

|

||||

```
go test .
go test ./contrib/.
```

|

||||

|

||||

If you want to run an individual test, you can provide a RegExp to the `-run` argument:

|

||||

```
go test -run TestMat
```
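
One detail worth keeping in mind: `go test` treats the `-run` value as an unanchored regular expression, so `TestMat` also selects any test whose name contains that text. The sketch below mimics that matching with the standard `regexp` package; the test names in it are hypothetical and only serve as illustration.

```go
package main

import (
	"fmt"
	"regexp"
)

// matchingTests returns the names selected by an unanchored
// regular expression, similar to how -run filters top-level tests.
func matchingTests(pattern string, names []string) []string {
	re := regexp.MustCompile(pattern)
	var selected []string
	for _, name := range names {
		if re.MatchString(name) {
			selected = append(selected, name)
		}
	}
	return selected
}

func main() {
	// Hypothetical test names, for illustration only.
	names := []string{"TestMat", "TestMatClone", "TestWindow"}

	fmt.Println(matchingTests("TestMat", names))   // prints [TestMat TestMatClone]
	fmt.Println(matchingTests("^TestMat$", names)) // prints [TestMat]
}
```

To run exactly one test, anchoring the pattern (for example `go test -run '^TestMat$'`) avoids picking up similarly named tests.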

|

||||

|

||||

If you are using Intel OpenVINO, you can run those tests using:

|

||||

|

||||

```
go test ./openvino/...
```

|

||||

|

||||

## Contributing workflow

|

||||

|

||||

This section provides a short description of one of many possible workflows you can follow to contribute to `GoCV`. This workflow is based on multiple [git remotes](https://git-scm.com/docs/git-remote) and it's by no means the only workflow you can use to contribute to `GoCV`. However, it's an option that might help you get started quickly without too much hassle, as this workflow lets you work off the `gocv` repo directory path!

|

||||

|

||||

Assuming you have already forked the `gocv` repo, you need to add a new `git remote` which will point to your GitHub fork. Notice below that you **must** `cd` to the `gocv` repo directory before you add the new `git remote`:

|

||||

|

||||

```shell
cd $GOPATH/src/gocv.io/x/gocv
git remote add gocv-fork https://github.com/YOUR_GH_HANDLE/gocv.git
```

|

||||

|

||||

Note that in the command above we called our new `git remote` **gocv-fork** for convenience, so we can easily recognize it. You are free to choose any remote name you like.

|

||||

|

||||

You should now see your new `git remote` when running the command below:

|

||||

|

||||

```shell
git remote -v

gocv-fork https://github.com/YOUR_GH_HANDLE/gocv.git (fetch)
gocv-fork https://github.com/YOUR_GH_HANDLE/gocv.git (push)
origin https://github.com/hybridgroup/gocv (fetch)
origin https://github.com/hybridgroup/gocv (push)
```

|

||||

|

||||

Before you create a new branch from `dev`, you should fetch the latest commits from the `dev` branch:

|

||||

|

||||

```shell
git fetch origin dev
```

|

||||

|

||||

You want the `dev` branch in your `gocv` fork to be in sync with the `dev` branch of `gocv`, so push the earlier fetched commits to your GitHub fork as shown below. Note, the `-f` force switch might not be needed:

|

||||

|

||||

```shell
git push gocv-fork dev -f
```

|

||||

|

||||

Create a new feature branch from `dev`:

|

||||

|

||||

```shell
git checkout -b new-feature
```

|

||||

|

||||

After you've made your changes you can run the tests using the `make` command listed below. Note, you're still working off the `gocv` project root directory, hence running the command below does not require complicated `$GOPATH` rewrites or whatnot:

|

||||

|

||||

```shell
make test
```

|

||||

|

||||

Once the tests have passed, commit your new code to the `new-feature` branch and push it to your fork by running the command below:

|

||||

|

||||

```shell
git push gocv-fork new-feature
```

|

||||

|

||||

You can now open a new PR from the `new-feature` branch in your forked repo against the `dev` branch of `gocv`.

|

||||

60

vendor/gocv.io/x/gocv/Dockerfile

generated

vendored

Normal file

@ -0,0 +1,60 @@

|

||||

FROM ubuntu:16.04 AS opencv

|

||||

LABEL maintainer="hybridgroup"

|

||||

|

||||

RUN apt-get update && apt-get install -y --no-install-recommends \

|

||||

git build-essential cmake pkg-config unzip libgtk2.0-dev \

|

||||

curl ca-certificates libcurl4-openssl-dev libssl-dev \

|

||||

libavcodec-dev libavformat-dev libswscale-dev libtbb2 libtbb-dev \

|

||||

libjpeg-dev libpng-dev libtiff-dev libdc1394-22-dev && \

|

||||

rm -rf /var/lib/apt/lists/*

|

||||

|

||||

ARG OPENCV_VERSION="4.2.0"

|

||||

ENV OPENCV_VERSION $OPENCV_VERSION

|

||||

|

||||

RUN curl -Lo opencv.zip https://github.com/opencv/opencv/archive/${OPENCV_VERSION}.zip && \

|

||||

unzip -q opencv.zip && \

|

||||

curl -Lo opencv_contrib.zip https://github.com/opencv/opencv_contrib/archive/${OPENCV_VERSION}.zip && \

|

||||

unzip -q opencv_contrib.zip && \

|

||||

rm opencv.zip opencv_contrib.zip && \

|

||||

cd opencv-${OPENCV_VERSION} && \

|

||||

mkdir build && cd build && \

|

||||

cmake -D CMAKE_BUILD_TYPE=RELEASE \

|

||||

-D CMAKE_INSTALL_PREFIX=/usr/local \

|

||||

-D OPENCV_EXTRA_MODULES_PATH=../../opencv_contrib-${OPENCV_VERSION}/modules \

|

||||

-D WITH_JASPER=OFF \

|

||||

-D BUILD_DOCS=OFF \

|

||||

-D BUILD_EXAMPLES=OFF \

|

||||

-D BUILD_TESTS=OFF \

|

||||

-D BUILD_PERF_TESTS=OFF \

|

||||

-D BUILD_opencv_java=NO \

|

||||

-D BUILD_opencv_python=NO \

|

||||

-D BUILD_opencv_python2=NO \

|

||||

-D BUILD_opencv_python3=NO \

|

||||

-D OPENCV_GENERATE_PKGCONFIG=ON .. && \

|

||||

make -j $(nproc --all) && \

|

||||

make preinstall && make install && ldconfig && \

|

||||

cd / && rm -rf opencv*

|

||||

|

||||

#################

|

||||

# Go + OpenCV #

|

||||

#################

|

||||

FROM opencv AS gocv

|

||||

LABEL maintainer="hybridgroup"

|

||||

|

||||

ARG GOVERSION="1.13.5"

|

||||

ENV GOVERSION $GOVERSION

|

||||

|

||||

RUN apt-get update && apt-get install -y --no-install-recommends \

|

||||

git software-properties-common && \

|

||||

curl -Lo go${GOVERSION}.linux-amd64.tar.gz https://dl.google.com/go/go${GOVERSION}.linux-amd64.tar.gz && \

|

||||

tar -C /usr/local -xzf go${GOVERSION}.linux-amd64.tar.gz && \

|

||||

rm go${GOVERSION}.linux-amd64.tar.gz && \

|

||||

rm -rf /var/lib/apt/lists/*

|

||||

|

||||

ENV GOPATH /go

|

||||

ENV PATH $GOPATH/bin:/usr/local/go/bin:$PATH

|

||||

|

||||

RUN mkdir -p "$GOPATH/src" "$GOPATH/bin" && chmod -R 777 "$GOPATH"

|

||||

WORKDIR $GOPATH

|

||||

|

||||

RUN go get -u -d gocv.io/x/gocv && go run ${GOPATH}/src/gocv.io/x/gocv/cmd/version/main.go

|

||||

202

vendor/gocv.io/x/gocv/LICENSE.txt

generated

vendored

Normal file

@ -0,0 +1,202 @@

|

||||

|

||||

Apache License

|

||||

Version 2.0, January 2004

|

||||

http://www.apache.org/licenses/

|

||||

|

||||

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

||||

|

||||

1. Definitions.

|

||||

|

||||

"License" shall mean the terms and conditions for use, reproduction,

|

||||

and distribution as defined by Sections 1 through 9 of this document.

|

||||

|

||||

"Licensor" shall mean the copyright owner or entity authorized by

|

||||

the copyright owner that is granting the License.

|

||||

|

||||

"Legal Entity" shall mean the union of the acting entity and all

|

||||

other entities that control, are controlled by, or are under common

|

||||

control with that entity. For the purposes of this definition,

|

||||

"control" means (i) the power, direct or indirect, to cause the

|

||||

direction or management of such entity, whether by contract or

|

||||

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

||||

outstanding shares, or (iii) beneficial ownership of such entity.

|

||||

|

||||

"You" (or "Your") shall mean an individual or Legal Entity

|

||||

exercising permissions granted by this License.

|

||||

|

||||

"Source" form shall mean the preferred form for making modifications,

|

||||

including but not limited to software source code, documentation

|

||||

source, and configuration files.

|

||||

|

||||

"Object" form shall mean any form resulting from mechanical

|

||||

transformation or translation of a Source form, including but

|

||||

not limited to compiled object code, generated documentation,

|

||||

and conversions to other media types.

|

||||

|

||||

"Work" shall mean the work of authorship, whether in Source or

|

||||

Object form, made available under the License, as indicated by a

|

||||

copyright notice that is included in or attached to the work

|

||||

(an example is provided in the Appendix below).

|

||||

|

||||

"Derivative Works" shall mean any work, whether in Source or Object

|

||||

form, that is based on (or derived from) the Work and for which the

|

||||

editorial revisions, annotations, elaborations, or other modifications

|

||||

represent, as a whole, an original work of authorship. For the purposes

|

||||

of this License, Derivative Works shall not include works that remain

|

||||

separable from, or merely link (or bind by name) to the interfaces of,

|

||||

the Work and Derivative Works thereof.

|

||||

|

||||

"Contribution" shall mean any work of authorship, including

|

||||

the original version of the Work and any modifications or additions

|

||||

to that Work or Derivative Works thereof, that is intentionally

|

||||

submitted to Licensor for inclusion in the Work by the copyright owner

|

||||

or by an individual or Legal Entity authorized to submit on behalf of

|

||||

the copyright owner. For the purposes of this definition, "submitted"

|

||||

means any form of electronic, verbal, or written communication sent

|

||||

to the Licensor or its representatives, including but not limited to

|

||||

communication on electronic mailing lists, source code control systems,

|

||||

and issue tracking systems that are managed by, or on behalf of, the

|

||||

Licensor for the purpose of discussing and improving the Work, but

|

||||

excluding communication that is conspicuously marked or otherwise

|

||||

designated in writing by the copyright owner as "Not a Contribution."

|

||||

|

||||

"Contributor" shall mean Licensor and any individual or Legal Entity

|

||||

on behalf of whom a Contribution has been received by Licensor and

|

||||

subsequently incorporated within the Work.

|

||||

|

||||

2. Grant of Copyright License. Subject to the terms and conditions of

|

||||

this License, each Contributor hereby grants to You a perpetual,

|

||||

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

||||

copyright license to reproduce, prepare Derivative Works of,

|

||||

publicly display, publicly perform, sublicense, and distribute the

|

||||

Work and such Derivative Works in Source or Object form.

|

||||

|

||||

3. Grant of Patent License. Subject to the terms and conditions of

|

||||

this License, each Contributor hereby grants to You a perpetual,

|

||||

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

||||

(except as stated in this section) patent license to make, have made,

|

||||

use, offer to sell, sell, import, and otherwise transfer the Work,

|

||||

where such license applies only to those patent claims licensable

|

||||

by such Contributor that are necessarily infringed by their

|

||||

Contribution(s) alone or by combination of their Contribution(s)

|

||||

with the Work to which such Contribution(s) was submitted. If You

|

||||

institute patent litigation against any entity (including a

|

||||

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

||||

or a Contribution incorporated within the Work constitutes direct

|

||||

or contributory patent infringement, then any patent licenses

|

||||

granted to You under this License for that Work shall terminate

|

||||

as of the date such litigation is filed.

|

||||

|

||||

4. Redistribution. You may reproduce and distribute copies of the

|

||||

Work or Derivative Works thereof in any medium, with or without

|

||||

modifications, and in Source or Object form, provided that You

|

||||

meet the following conditions:

|

||||

|

||||

(a) You must give any other recipients of the Work or

|

||||

Derivative Works a copy of this License; and

|

||||

|

||||

(b) You must cause any modified files to carry prominent notices

|

||||

stating that You changed the files; and

|

||||

|

||||

(c) You must retain, in the Source form of any Derivative Works

|

||||

that You distribute, all copyright, patent, trademark, and

|

||||

attribution notices from the Source form of the Work,

|

||||

excluding those notices that do not pertain to any part of

|

||||

the Derivative Works; and

|

||||

|

||||

(d) If the Work includes a "NOTICE" text file as part of its

|

||||

distribution, then any Derivative Works that You distribute must

|

||||

include a readable copy of the attribution notices contained

|

||||

within such NOTICE file, excluding those notices that do not

|

||||

pertain to any part of the Derivative Works, in at least one

|

||||

of the following places: within a NOTICE text file distributed

|

||||

as part of the Derivative Works; within the Source form or

|

||||

documentation, if provided along with the Derivative Works; or,

|

||||

within a display generated by the Derivative Works, if and

|

||||

wherever such third-party notices normally appear. The contents

|

||||

of the NOTICE file are for informational purposes only and

|

||||

do not modify the License. You may add Your own attribution

|

||||

notices within Derivative Works that You distribute, alongside

|

||||

or as an addendum to the NOTICE text from the Work, provided

|

||||

that such additional attribution notices cannot be construed

|

||||

as modifying the License.

|

||||

|

||||

You may add Your own copyright statement to Your modifications and

|

||||

may provide additional or different license terms and conditions

|

||||

for use, reproduction, or distribution of Your modifications, or

|

||||

for any such Derivative Works as a whole, provided Your use,

|

||||

reproduction, and distribution of the Work otherwise complies with

|

||||

the conditions stated in this License.

|

||||

|

||||

5. Submission of Contributions. Unless You explicitly state otherwise,

|

||||

any Contribution intentionally submitted for inclusion in the Work

|

||||

by You to the Licensor shall be under the terms and conditions of

|

||||

this License, without any additional terms or conditions.

|

||||

Notwithstanding the above, nothing herein shall supersede or modify

|

||||

the terms of any separate license agreement you may have executed

|

||||

with Licensor regarding such Contributions.

|

||||

|

||||

6. Trademarks. This License does not grant permission to use the trade

|

||||

names, trademarks, service marks, or product names of the Licensor,

|

||||

except as required for reasonable and customary use in describing the

|

||||

origin of the Work and reproducing the content of the NOTICE file.

|

||||

|

||||

7. Disclaimer of Warranty. Unless required by applicable law or

|

||||

agreed to in writing, Licensor provides the Work (and each

|

||||

Contributor provides its Contributions) on an "AS IS" BASIS,

|

||||

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

||||

implied, including, without limitation, any warranties or conditions

|

||||

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

||||

PARTICULAR PURPOSE. You are solely responsible for determining the

|

||||

appropriateness of using or redistributing the Work and assume any

|

||||

risks associated with Your exercise of permissions under this License.

|

||||

|

||||

8. Limitation of Liability. In no event and under no legal theory,

|

||||

whether in tort (including negligence), contract, or otherwise,

|

||||

unless required by applicable law (such as deliberate and grossly

|

||||

negligent acts) or agreed to in writing, shall any Contributor be

|

||||

liable to You for damages, including any direct, indirect, special,

|

||||

incidental, or consequential damages of any character arising as a

|

||||

result of this License or out of the use or inability to use the

|

||||

Work (including but not limited to damages for loss of goodwill,

|

||||

work stoppage, computer failure or malfunction, or any and all

|

||||

other commercial damages or losses), even if such Contributor

|

||||

has been advised of the possibility of such damages.

|

||||

|

||||

9. Accepting Warranty or Additional Liability. While redistributing

|

||||

the Work or Derivative Works thereof, You may choose to offer,

|

||||

and charge a fee for, acceptance of support, warranty, indemnity,

|

||||

or other liability obligations and/or rights consistent with this

|

||||

License. However, in accepting such obligations, You may act only

|

||||

on Your own behalf and on Your sole responsibility, not on behalf

|

||||

of any other Contributor, and only if You agree to indemnify,

|

||||

defend, and hold each Contributor harmless for any liability

|

||||

incurred by, or claims asserted against, such Contributor by reason

|

||||

of your accepting any such warranty or additional liability.

|

||||

|

||||

END OF TERMS AND CONDITIONS

|

||||

|

||||

APPENDIX: How to apply the Apache License to your work.

|

||||

|

||||

To apply the Apache License to your work, attach the following

|

||||

boilerplate notice, with the fields enclosed by brackets "[]"

|

||||

replaced with your own identifying information. (Don't include

|

||||

the brackets!) The text should be enclosed in the appropriate

|

||||

comment syntax for the file format. We also recommend that a

|

||||

file or class name and description of purpose be included on the

|

||||

same "printed page" as the copyright notice for easier

|

||||

identification within third-party archives.

|

||||

|

||||

Copyright (c) 2017-2019 The Hybrid Group

|

||||

|

||||

Licensed under the Apache License, Version 2.0 (the "License");

|

||||

you may not use this file except in compliance with the License.

|

||||

You may obtain a copy of the License at

|

||||

|

||||

http://www.apache.org/licenses/LICENSE-2.0

|

||||

|

||||

Unless required by applicable law or agreed to in writing, software

|

||||

distributed under the License is distributed on an "AS IS" BASIS,

|

||||

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

See the License for the specific language governing permissions and

|

||||

limitations under the License.

|

||||

138

vendor/gocv.io/x/gocv/Makefile

generated

vendored

Normal file

@ -0,0 +1,138 @@

|

||||

.ONESHELL:

|

||||

.PHONY: test deps download build clean astyle cmds docker

|

||||

|

||||

# OpenCV version to use.

|

||||

OPENCV_VERSION?=4.2.0

|

||||

|

||||

# Go version to use when building Docker image

|

||||

GOVERSION?=1.13.1

|

||||

|

||||

# Temporary directory to put files into.

|

||||

TMP_DIR?=/tmp/

|

||||

|

||||

# Package list for each well-known Linux distribution

|

||||

RPMS=cmake curl git gtk2-devel libpng-devel libjpeg-devel libtiff-devel tbb tbb-devel libdc1394-devel unzip

|

||||

DEBS=unzip build-essential cmake curl git libgtk2.0-dev pkg-config libavcodec-dev libavformat-dev libswscale-dev libtbb2 libtbb-dev libjpeg-dev libpng-dev libtiff-dev libdc1394-22-dev

|

||||

|

||||

# Detect Linux distribution

|

||||

distro_deps=

|

||||

ifneq ($(shell which dnf 2>/dev/null),)

|

||||

distro_deps=deps_fedora

|

||||

else

|

||||

ifneq ($(shell which apt-get 2>/dev/null),)

|

||||

distro_deps=deps_debian

|

||||

else

|

||||

ifneq ($(shell which yum 2>/dev/null),)

|

||||

distro_deps=deps_rh_centos

|

||||

endif

|